Anthropic benchmarked its latest AI model using Pokémon

Anthropic used Pokémon to benchmark its newest AI model. Yes, really.

In a blog post published Monday, Anthropic said it tested its latest model, Claude 3.7 Sonnet, on the Game Boy classic Pokémon Red. The company equipped the model with memory, screen pixel input, and function calls to press buttons and navigate around the screen, allowing it to play Pokémon continuously.
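Anthropic has not published its harness, but the loop described above (look at the screen, pick a button through a function call, remember what happened) can be sketched roughly as follows. Everything here is hypothetical and for illustration only: `FakeEmulator`, `run_agent`, and `walk_right_then_down` are made-up names, the "screen" is a tiny dictionary instead of real pixels, and a hard-coded policy stands in for the model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

BUTTONS = ("up", "down", "left", "right", "a", "b", "start", "select")


@dataclass
class FakeEmulator:
    """Hypothetical stand-in for a Game Boy emulator (not a real library)."""
    x: int = 0
    y: int = 0
    presses: List[str] = field(default_factory=list)

    def screenshot(self) -> dict:
        # A real harness would return screen pixels; this returns the position.
        return {"x": self.x, "y": self.y}

    def press(self, button: str) -> None:
        # This is the "function call" the model would use to push a button.
        if button not in BUTTONS:
            raise ValueError(f"unknown button: {button}")
        self.presses.append(button)
        moves = {"left": (-1, 0), "right": (1, 0), "up": (0, -1), "down": (0, 1)}
        dx, dy = moves.get(button, (0, 0))
        self.x += dx
        self.y += dy


def run_agent(
    emulator: FakeEmulator,
    choose_button: Callable[[dict, List[str]], str],
    max_steps: int,
    memory_size: int = 5,
) -> List[str]:
    """Observe, act, and keep a short rolling memory of recent steps."""
    memory: List[str] = []
    for _ in range(max_steps):
        observation = emulator.screenshot()
        button = choose_button(observation, memory)  # the model would decide here
        emulator.press(button)
        memory.append(f"saw {observation}, pressed {button}")
        memory = memory[-memory_size:]  # drop the oldest notes
    return memory


def walk_right_then_down(observation: dict, memory: List[str]) -> str:
    """Hard-coded placeholder policy standing in for the AI model."""
    return "right" if observation["x"] < 3 else "down"


emu = FakeEmulator()
notes = run_agent(emu, walk_right_then_down, max_steps=5)
print(emu.presses)
```

In a real setup, the placeholder policy would be replaced by a call to the model, and the step cap would be lifted so play could continue for as long as the operator wanted.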

A distinguishing feature of Claude 3.7 Sonnet is its capacity for "extended thinking." Like OpenAI’s o3-mini and DeepSeek’s R1, it can devote more computing power and, more importantly, more time to working through tricky problems.

That turned out to be a huge asset in the Pokémon Red adventure.

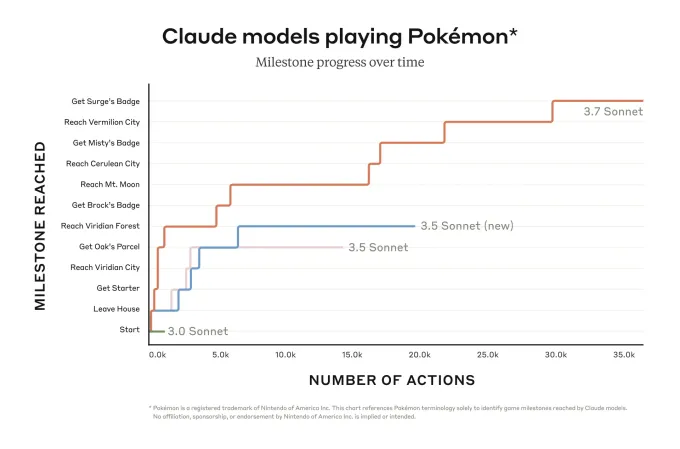

In a stellar performance, Claude 3.7 Sonnet eclipsed its predecessor, Claude 3.0 Sonnet, which struggled to leave the house in Pallet Town, right where the saga kicks off. The improved model locked horns with three Pokémon gym leaders, and came back with their badges!

Image Source:Anthropic

It's not clear how much computing Claude 3.7 Sonnet needed to reach those milestones, or how long it took.

Someone out there is probably already working to find out.

Pokémon Red is mostly a playful benchmark, but games have a long history in AI benchmarking. Recently, a flurry of new apps and platforms has emerged to test AI models' game-playing skills on titles ranging from Street Fighter to Pictionary.